I've always looked for a web analytics solution that respects user privacy and doesn’t slow down my website. The default option for most people is Google Analytics – it’s popular, but in my opinion, it’s bloated and far from privacy-friendly.

Many analytics platforms aren’t GDPR-compliant and often require cookie consent banners, which can hurt the user experience. On top of that, relying on third-party services can negatively affect your site’s performance – just like Google Analytics does.

So why do we keep using third-party tools to collect visitor data when we can host our own analytics and truly own our data?

Sure, some platforms offer deep insights, but unless your business relies heavily on those insights, all that detail isn’t always necessary.

In this guide, I’ll show you how to self-host Umami – an open-source analytics tool that’s fast, privacy-friendly, and easy to host.

Umami No Longer Supports MySQL

As of Umami v3, MySQL is no longer supported.

In previous versions of this guide, I used MySQL as the database backend.

If you installed Umami following those steps, don’t worry – you can migrate your existing data to PostgreSQL, which is now the only supported database engine.

If you’re new to Umami, simply follow this guide from the beginning; it now covers installation with PostgreSQL.

Why Choose Umami?

After using Google Analytics for a couple of months to track my website’s traffic, I just stopped. I said to myself, nah, there has to be a better solution.

It made my website feel heavy and sluggish. I had to add a cookie banner just to stay compliant, and honestly, that hurt the user experience. People would land on my site, see the banner, and bounce – probably never to return.

I’ve always wanted to build a site that feels fast, clean, and respectful. So why should I ruin the experience just to collect some traffic stats?

Eventually, I came across Pirsch.io – a solid, privacy-friendly tool that doesn’t use cookies and doesn’t require consent banners. I used it for a while and liked it.

But if you know me… I’m the self-host guy. I wanted full control – my server, my data, my rules.

That’s when I found Umami. It anonymizes visitor data to protect privacy, doesn’t use cookies (so no annoying cookie banners), and since it’s self-hosted on your own infrastructure, your data stays entirely in your hands.

The best part? Its tracking script is only 2KB in size – practically nothing! Google Analytics used to slow down my site’s page load time by around 500ms.

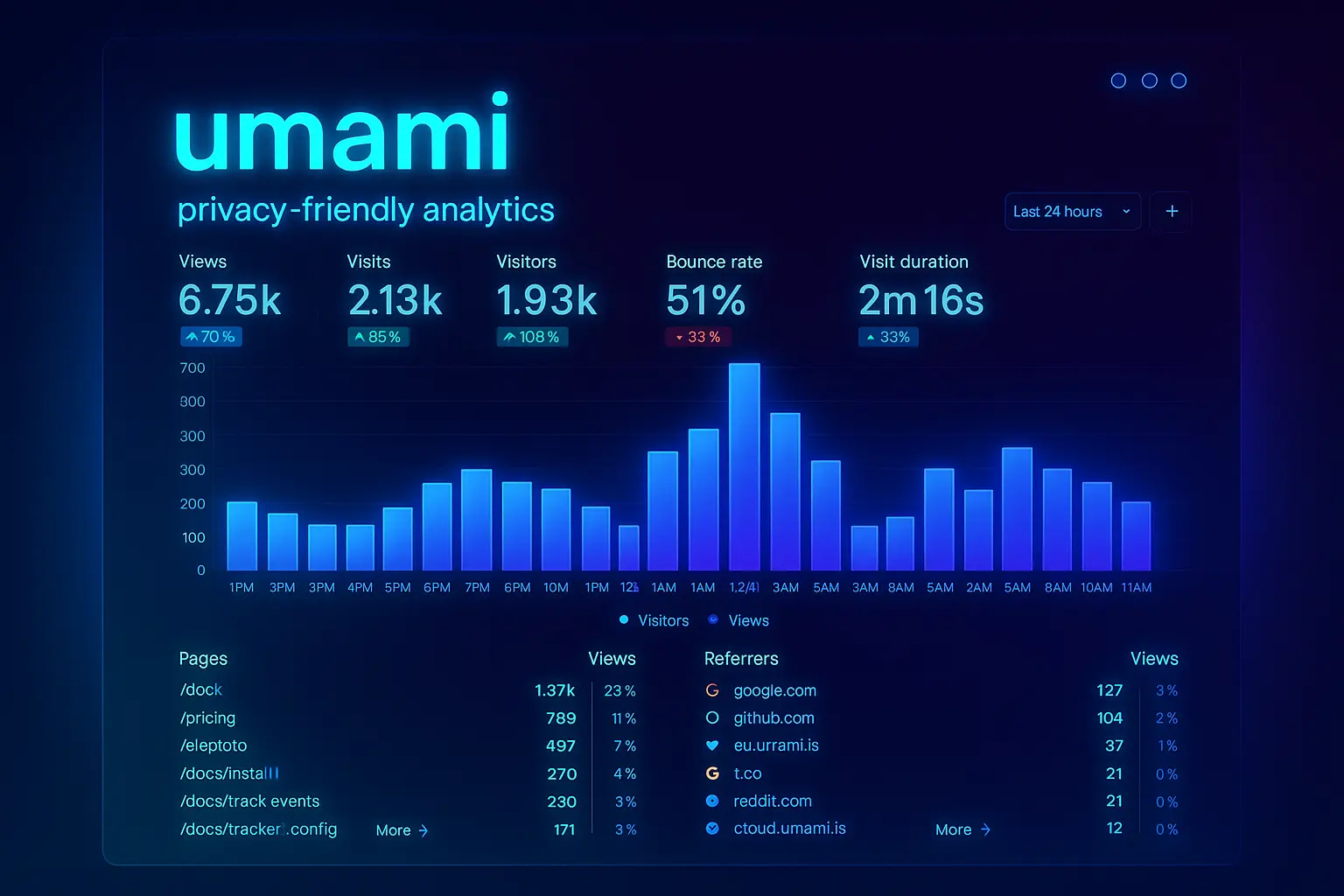

On top of that, it has a fantastic UI – easy to navigate, simple to understand, and quick to find exactly what you need. It also comes packed with features like reporting, comparison tools, filtering, custom events, team collaboration, and much more.

Requirements Before You Start

To follow this guide, you’ll need a server running Ubuntu 24.04 LTS, prepared for installing Umami. I personally recommend using Hetzner – it's reliable and affordable.

Make sure you’ve set up DNS records for the domain or subdomain you plan to use for accessing Umami’s web interface:

- Add an A record pointing to your server’s IPv4 address.

- If you’re using IPv6, also add an AAAA record.

I always recommend running self-hosted projects on the main hostname (like umami.yourdomain.com) if that project is the only thing hosted on the server – which is the case here. I don’t recommend running Umami alongside other services on the same server. Instead, always opt for one server per project when possible. It keeps things clean, reduces conflicts, and makes troubleshooting easier.

You can enable protection for your server’s primary IP addresses in the Hetzner dashboard to ensure they’re preserved even if the server is deleted.

This way, when restoring Umami on a new server, you can reassign the same IPs – avoiding the need to update any DNS settings.

The only major requirement for installing Umami is having Docker Engine and the Docker Compose plugin installed on your server.

Umami doesn’t come with an automatic installation script – instead, we’ll create a docker-compose.yml file and define the necessary configuration ourselves.

The official repository ships an example PostgreSQL stack, and I use that as a baseline before layering on my own tweaks.

Installing Umami

Installing Umami is a straightforward process.

First, navigate to your home directory and create a new directory for Umami:

```bash
mkdir ~/umami
cd ~/umami
```

Next, create a docker-compose.yml file inside that directory and add the following configuration:

```yaml
services:
  umami:
    image: docker.umami.is/umami-software/umami:postgresql-latest
    ports:
      - "3000:3000"
    environment:
      DATABASE_URL: postgresql://${POSTGRES_USER}:${POSTGRES_PASSWORD}@umami-postgres:5432/${POSTGRES_DB}
      DATABASE_TYPE: postgresql
      APP_SECRET: ${APP_SECRET}
    depends_on:
      umami-postgres:
        condition: service_healthy
    restart: unless-stopped
    healthcheck:
      test: ["CMD-SHELL", "curl -f http://localhost:3000/api/heartbeat || exit 1"]
      interval: 5s
      timeout: 5s
      retries: 5
  umami-postgres:
    image: postgres:15
    environment:
      POSTGRES_DB: ${POSTGRES_DB}
      POSTGRES_USER: ${POSTGRES_USER}
      POSTGRES_PASSWORD: ${POSTGRES_PASSWORD}
    volumes:
      - ./umami-postgres-data:/var/lib/postgresql/data
    restart: unless-stopped
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -d ${POSTGRES_DB} -U ${POSTGRES_USER}"]
      interval: 5s
      timeout: 5s
      retries: 5
```

This docker-compose.yml file defines two services:

- umami: the Umami application itself.
- umami-postgres: the PostgreSQL database that Umami uses to store analytics data.

The database files live in ./umami-postgres-data on the host. Feel free to rename that directory; just use the same path everywhere you reference it.

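You’ll notice the Postgres image is pinned to postgres:15. That matches Umami’s upstream example compose file and keeps the base install aligned with what the maintainers test against. If Umami bumps the version in their reference stack, you can follow suit. Keep in mind, though, that moving to a new PostgreSQL major version means migrating the data directory (for example, with a dump and restore). Changing the image tag alone is not enough.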

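Docker Compose puts all services on a shared internal network, so Umami reaches the database by its service name (umami-postgres). The depends_on entry with condition: service_healthy makes Umami wait until the database passes its health check before starting. All sensitive values, like database credentials and secret keys, are pulled from environment variables using the ${VARIABLE_NAME} format. Compose fills them in from a .env file in the same directory.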

You need to create a .env file in the same directory as your docker-compose.yml with the following variables:

```
POSTGRES_DB=umami
POSTGRES_USER=umami
POSTGRES_PASSWORD=userpassword
APP_SECRET=yourgeneratedsecretkey
REDIS_PASS=redispassword
REDIS_URL=redis://:${REDIS_PASS}@redis:6379
```

That covers both the PostgreSQL credentials and the Redis settings we’ll enable later. Feel free to pick stronger values right away so you don’t have to revisit the file.
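When choosing the PostgreSQL password, remember that it ends up inside the DATABASE_URL connection string. That makes it subject to URL parsing rules. The Python sketch below uses the standard library's urllib purely as an illustration of generic URL parsing; it is not the parser Umami uses. It shows how a # in the password silently cuts the URL short. It also shows the usual fix, percent-encoding. The helper name is hypothetical.

Percent-encoding is not a drop-in fix for the compose file in this guide. There, POSTGRES_PASSWORD is passed raw to the Postgres container and also interpolated into DATABASE_URL.

```python
from urllib.parse import quote, unquote, urlsplit


def database_url(user: str, password: str, host: str, port: int, db: str,
                 encode: bool = False) -> str:
    """Assemble a postgresql:// URL, optionally percent-encoding the password."""
    pw = quote(password, safe="") if encode else password
    return f"postgresql://{user}:{pw}@{host}:{port}/{db}"


password = "p#ss@word!"

# Raw password: the parser treats everything after '#' as a URL fragment,
# so the host, port, and database name are lost.
raw = urlsplit(database_url("umami", password, "umami-postgres", 5432, "umami"))
print(raw.netloc)  # umami:p

# Percent-encoded password: the host is found and the password round-trips.
enc = urlsplit(database_url("umami", password, "umami-postgres", 5432, "umami", encode=True))
print(enc.hostname, enc.port, unquote(enc.password))  # umami-postgres 5432 p#ss@word!
```

Because the same variable feeds both places here, sticking to letters and digits (and making the password long) is the simpler route.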

To generate a secure APP_SECRET value, you can run:

```bash
openssl rand -base64 32
```

Copy the output and paste it as the value for APP_SECRET in your .env file.
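If openssl isn't handy, Python's standard library can produce the same kind of value. This is a minimal sketch, not something Umami ships, and the function name is hypothetical. It reads 32 bytes from the secrets module (a cryptographically secure source) and base64-encodes them, mirroring what openssl rand -base64 32 prints.

```python
import base64
import secrets


def generate_app_secret(num_bytes: int = 32) -> str:
    """Return num_bytes of cryptographically secure random data, base64-encoded."""
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


# Prints a line you can paste into .env: APP_SECRET=<44 base64 characters>
print(f"APP_SECRET={generate_app_secret()}")
```

32 random bytes always encode to 44 base64 characters, the same length openssl produces.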

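Avoid special characters such as !, @, #, or & in your PostgreSQL password. Several of these have a special meaning in URLs (and the password ends up inside the DATABASE_URL connection string) or in the shell, so they can cause parsing issues inside Docker or PostgreSQL.

Once your docker-compose.yml and .env files are ready, start Umami by running the following command inside the same directory: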

```bash
sudo docker compose up -d
```

This pulls the necessary images, starts the containers in the background, and gets Umami running on port 3000.

You can now check if everything is running properly with:

```bash
sudo docker ps
```

This lists all running containers. You should see both the umami and umami-postgres services listed with a status of Up.
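Besides docker ps, you can check that the app itself responds. The healthcheck in the compose file calls Umami's /api/heartbeat endpoint. The Python sketch below polls the same endpoint from the server until it returns HTTP 200. That is handy right after docker compose up -d, while the containers are still starting. The helper names are hypothetical; only the standard library is used.

```python
import time
import urllib.request


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code of a GET request to url."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.status


def wait_for_heartbeat(url: str = "http://127.0.0.1:3000/api/heartbeat",
                       attempts: int = 10, delay: float = 3.0,
                       get_status=http_status) -> bool:
    """Poll Umami's heartbeat endpoint until it answers 200 or attempts run out."""
    for attempt in range(attempts):
        try:
            if get_status(url) == 200:
                return True
        except OSError:
            # Connection refused, timeouts, and urllib's URLError/HTTPError are all
            # OSError subclasses: treat them as "not up yet" and try again.
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

On the server, print(wait_for_heartbeat()) should print True once Umami is ready. The 127.0.0.1 address keeps working after you later restrict the port binding to localhost.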

Note that the container names may look different from the service names you used in the docker-compose.yml. That’s because the Docker Compose plugin generates container names using this pattern:

```
<project-name>-<service-name>-<index>
```

Here’s a quick breakdown:

| Part | Value |
|---|---|
| project-name | umami (folder name) |
| service-name | umami, umami-postgres |
| index | 1 (first container) |

So if your project folder is named umami, your container names will likely be:

```
umami-umami-1
umami-umami-postgres-1
```
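As a quick illustration of the pattern, here's a small Python helper (hypothetical, not part of Docker) that predicts the default names. It assumes the project name comes from the folder name. Compose also lets you override it, for example with the -p flag or the COMPOSE_PROJECT_NAME variable. Its real normalization rules are also stricter than the simplified lowercase-and-strip step used here.

```python
import re


def compose_container_name(project: str, service: str, index: int = 1) -> str:
    """Build a container name following Compose's <project>-<service>-<index> pattern."""
    # Simplified assumption: lowercase the folder name and drop characters
    # outside a-z, 0-9, '_' and '-'.
    normalized = re.sub(r"[^a-z0-9_-]", "", project.lower())
    return f"{normalized}-{service}-{index}"


for service in ("umami", "umami-postgres", "redis"):
    print(compose_container_name("umami", service))
```

The same rule explains names used later in this guide, such as umami-redis-1. It also gives umami-postgresql-umami-1 for a project folder named umami-postgresql.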

If something isn’t working or you want to check what’s going on behind the scenes, use:

```bash
sudo docker logs <container_name_or_id>
```

You can use either the container’s name or its ID to check the logs for errors or issues.

You can now access Umami’s web interface by visiting:

```
http://your_server_ip:3000
```

Log in using the default credentials:

- Username: admin
- Password: umami

Reverse Proxy Setup

We definitely don’t want to access our web interface using a non-secure connection and our server’s IP address. Instead, we want to use our server’s hostname over a secure HTTPS connection.

To achieve that, we’ll set up a reverse proxy.

You can use any reverse proxy you're comfortable with (like NGINX), but in this guide, we’ll use Caddy. It's a lightweight, modern web server that's easy to configure – and best of all, it handles SSL certificates automatically using Let's Encrypt.

Install Caddy with:

```bash
sudo apt install caddy
```

Caddy’s configuration is refreshingly simple. Start by opening the config file:

```bash
sudo vim /etc/caddy/Caddyfile
```

Clear out the default contents and paste in the following config (replace umami.yourdomain.com with your actual domain):

```
umami.yourdomain.com {
    reverse_proxy localhost:3000
}
```

Before restarting Caddy, make sure your firewall lets ports 80 and 443 through; otherwise, Let’s Encrypt can’t validate the certificate. With UFW, that looks like this:

```bash
sudo ufw allow 80/tcp
sudo ufw allow 443/tcp
```

With the rules in place, restart Caddy:

```bash
sudo systemctl restart caddy
```

Caddy will automatically obtain an SSL certificate for your domain and start proxying traffic to your Umami container on port 3000.
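To confirm from any machine that Caddy is actually serving a valid certificate, you can open a TLS connection and read the certificate's expiry date. This is a Python sketch with hypothetical helper names. ssl.create_default_context() verifies the certificate chain and hostname, so a broken setup raises ssl.SSLCertVerificationError instead of returning a date. umami.yourdomain.com is the placeholder domain from the Caddyfile.

```python
import socket
import ssl


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Connect over TLS (verifying chain and hostname) and return the cert's notAfter field."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def days_left(not_after: str, now: float) -> int:
    """Whole days between `now` (epoch seconds) and a notAfter string like 'Jan  5 09:34:43 2018 GMT'."""
    return int((ssl.cert_time_to_seconds(not_after) - now) // 86400)
```

For example, print(days_left(fetch_not_after("umami.yourdomain.com"), time.time())) after import time. Caddy renews certificates on its own, so this is only a sanity check that the first issuance worked.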

Restrict Direct Access to Port 3000

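With the current "3000:3000" mapping, Docker publishes Umami on port 3000 on every network interface. That means anyone can reach it directly via your server's IP (http://your_server_ip:3000). Docker also manages its own iptables rules for published ports, so UFW rules alone won't block this.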

To enhance security and ensure all traffic goes through your reverse proxy (Caddy), you should restrict Umami to listen only on localhost.

In your docker-compose.yml, change the port binding from:

```yaml
ports:
  - "3000:3000"
```

To:

```yaml
ports:
  - "127.0.0.1:3000:3000"
```

This ensures Umami is only reachable from inside the server (by Caddy) and blocks external access on port 3000.

Then, from inside your Umami project directory, restart your Docker containers:

```bash
sudo docker compose down
sudo docker compose up -d
```

Umami is now securely hidden behind your reverse proxy.
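To double-check the lockdown, test the ports from a machine outside the server. From the server itself, 127.0.0.1:3000 is still supposed to answer. This minimal Python sketch (the helper name is hypothetical) just attempts a TCP connection.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, filtered (timed out), or unreachable all count as "not open".
        return False
```

From your own computer, port_is_open("your_server_ip", 3000) should now return False, while port_is_open("your_server_ip", 443) should return True because Caddy is listening there.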

Enabling Redis

Umami supports Redis as a caching layer to improve performance and handle login authentication.

When enabled, Redis caches frequently accessed data like website lookups, reducing database load and speeding up responses. It also replaces JWT tokens with Redis-based session management, providing a more efficient and secure way to handle user sessions.

To enable Redis, we need to edit our docker-compose.yml file to include Redis as a service and connect Umami to it.

First, in the environment section of the umami service, add a new variable below APP_SECRET:

```yaml
REDIS_URL: ${REDIS_URL}
```

This tells Umami where to find Redis.

If you followed the earlier .env snippet, you already have the Redis variables in place. Otherwise, add the following lines now (and replace redispassword with something strong):

```
REDIS_PASS=redispassword
REDIS_URL=redis://:${REDIS_PASS}@redis:6379
```

REDIS_PASS is the single source of truth for both the Redis container and Umami’s connection string, so you only have to set the password once.
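The ${REDIS_PASS} reference works because Docker Compose expands variables defined earlier in the same .env file. The Python sketch below is a deliberately simplified model of that behavior, not Compose's actual parser. Compose's parser also handles quoting, defaults such as ${VAR:-default}, and values from your shell, none of which this attempts. The function name is hypothetical.

```python
import re

_VAR = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, expanding ${VAR} from keys defined earlier in the file."""
    env: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and malformed lines
        key, value = line.split("=", 1)
        # Unknown variables expand to an empty string in this sketch.
        env[key.strip()] = _VAR.sub(lambda m: env.get(m.group(1), ""), value.strip())
    return env


sample = "REDIS_PASS=s3cret\nREDIS_URL=redis://:${REDIS_PASS}@redis:6379\n"
print(parse_env(sample)["REDIS_URL"])  # redis://:s3cret@redis:6379
```

Changing REDIS_PASS alone changes the URL Umami receives, which is why you only set the password once.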

Now, add Redis as a service to your docker-compose.yml file so it runs as a container alongside Umami. Place this service definition at the end of the file, indented under services:

```yaml
  redis:
    image: redis:latest
    command: redis-server --requirepass ${REDIS_PASS}
    volumes:
      - ./redis-data:/data
    restart: unless-stopped
    healthcheck:
      test: ["CMD", "redis-cli", "-a", "${REDIS_PASS}", "ping"]
      interval: 5s
      timeout: 5s
      retries: 5
```

Also, in your umami service section, add Redis as a dependency so that Umami waits for Redis to be ready before starting. Under depends_on, include:

```yaml
depends_on:
  umami-postgres:
    condition: service_healthy
  redis:
    condition: service_healthy
```

After updating the docker-compose.yml file, apply the changes by restarting your containers:

```bash
sudo docker compose down
sudo docker compose up -d
```

Verify that all containers are running:

```bash
sudo docker ps
```

To check that Redis is working and accepting connections, open redis-cli inside the Redis container. Replace your_redis_password with the REDIS_PASS value from your .env file. That variable is read by Docker Compose and isn't defined in your host shell by default.

```bash
sudo docker exec -it umami-redis-1 redis-cli -a 'your_redis_password'
```

Inside the Redis CLI, test the connection:

```
ping
```

If Redis is working, it will respond with:

```
PONG
```

Finally, to confirm that Umami is connecting to Redis, you can monitor Redis activity:

```bash
sudo docker exec -it umami-redis-1 redis-cli -a 'your_redis_password' monitor
```

Then visit your Umami web interface. You should see Redis commands appear, confirming that Redis is handling caching and session management.
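Under the hood, redis-cli ping just sends commands in Redis's RESP protocol: an array header (*N) followed by bulk strings ($length, then the bytes). The Python sketch below, with hypothetical helper names, encodes AUTH and PING that way and reads back the two replies. In this setup Redis has no published host port. To actually call redis_ping you would point it at the container's IP on the Docker network, which the host can reach on Linux. Treat that part as an assumption about your environment.

```python
import socket


def encode_command(*args: str) -> bytes:
    """Encode a Redis command as a RESP array of bulk strings."""
    parts = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        parts.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(parts)


def redis_ping(host: str, port: int, password: str, timeout: float = 3.0) -> bytes:
    """Send AUTH then PING on one connection and return the raw replies."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_command("AUTH", password) + encode_command("PING"))
        replies = b""
        # Each reply here is a single line (+OK / +PONG, or a -ERR style line).
        while replies.count(b"\r\n") < 2:
            chunk = sock.recv(1024)
            if not chunk:
                break
            replies += chunk
        return replies
```

With the right password the replies should be b"+OK\r\n+PONG\r\n". A wrong password produces error lines starting with - instead.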

Installing Updates

To upgrade to the latest version, simply pull the updated image and restart your containers.

Navigate to your Umami project directory and pull the latest image for the PostgreSQL build:

```bash
sudo docker pull docker.umami.is/umami-software/umami:postgresql-latest
```

Recreate your containers using the updated image:

```bash
sudo docker compose down
sudo docker compose up -d
```

Umami applies any necessary database migrations automatically on startup.

If you’re still running the legacy MySQL stack, skip down to the migration section before pulling any new images.

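Because the database lives in a bind-mounted directory on the host (./umami-postgres-data), recreating the containers leaves your data in place. The update typically takes just a few seconds.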

Disaster Recovery

The final step in setting up your self-hosted analytics platform is planning for disaster recovery.

Think about what you’d do if something goes wrong – like a server breach, a misconfiguration, a bad update, or even something out of your control, like a fire in your provider’s data center. You need a way to quickly bring Umami back online without losing your analytics data.

We already have our primary IPs secured (assuming you have enabled protection for them) – they stay with us no matter what, which is great. Now, we need a reliable way to restore the server and reassign those same IPs. The best way to do this is by using snapshots.

If you're using Hetzner, you can go to your server’s Snapshots tab and create one. A snapshot is a full backup of your server’s disk at that point in time.

If disaster strikes, you can spin up a new server from that snapshot, assign the primary IPs, and it should work right away – no need to change any DNS settings or reconfigure Caddy.

Make sure to test this recovery process at least once so you’re confident it works.

Snapshots are created manually. If you want automatic backups, you can enable Hetzner's backup feature. It costs 20% extra, but it keeps daily backups for a week. Once the week is over, old backups are replaced by new ones. You can convert any of these backups into a snapshot, which you can then use to restore your server.

Migrating from MySQL to PostgreSQL

It took me two days to finish this section!

Umami made it unnecessarily hard for Docker users to migrate – just because they didn’t want to spend some time writing a proper walkthrough.

Their official page barely covers the migration and even that doesn’t work out of the box.

What you’ll do (high level):

- Spin up a new Umami stack on PostgreSQL using Umami v2.19.0 (the last v2 release).

- Import your data from the old MySQL instance.

- Verify everything on a temporary port (3001).

- Switch the image to v3 and cut over to port 3000.

Why v2.19.0 first?

You migrate the data into a clean v2 database, verify, and then upgrade the app to v3. This avoids schema surprises during the import.

First, create a new directory for the PostgreSQL setup:

```bash
mkdir umami-postgresql
cd umami-postgresql
```

Inside that directory, create two files: .env and docker-compose.yml.

Open .env and add the following:

```
POSTGRES_DB=umami
POSTGRES_USER=umami
POSTGRES_PASSWORD=password
APP_SECRET=yourgeneratedsecretkey
REDIS_PASS=redispassword
REDIS_URL=redis://:${REDIS_PASS}@redis:6379
```

Open docker-compose.yml and add the following:

```yaml
services:
  umami:
    image: docker.umami.is/umami-software/umami:postgresql-v2.19.0
    ports:
      - "3001:3000"
    environment:
      DATABASE_URL: postgresql://${POSTGRES_USER}:${POSTGRES_PASSWORD}@umami-postgres:5432/${POSTGRES_DB}
      APP_SECRET: ${APP_SECRET}
      REDIS_URL: ${REDIS_URL}
    depends_on:
      umami-postgres:
        condition: service_healthy
      redis:
        condition: service_healthy
    restart: unless-stopped
    healthcheck:
      test: ["CMD-SHELL", "curl -fsS http://localhost:3000/api/heartbeat || exit 1"]
      interval: 5s
      timeout: 5s
      retries: 5
  umami-postgres:
    image: postgres:15
    environment:
      POSTGRES_DB: ${POSTGRES_DB}
      POSTGRES_USER: ${POSTGRES_USER}
      POSTGRES_PASSWORD: ${POSTGRES_PASSWORD}
    volumes:
      - ./umami-pg-data:/var/lib/postgresql/data
    restart: unless-stopped
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U ${POSTGRES_USER} -d ${POSTGRES_DB}"]
      interval: 5s
      timeout: 5s
      retries: 5
  redis:
    image: redis:latest
    command: redis-server --requirepass ${REDIS_PASS}
    volumes:
      - ./redis-data:/data
    restart: unless-stopped
    healthcheck:
      test: ["CMD", "redis-cli", "-a", "${REDIS_PASS}", "ping"]
      interval: 5s
      timeout: 5s
      retries: 5
```

We’re spinning up a new Umami instance on port 3001 so we can verify the migration before replacing the old one.

This setup uses the last MySQL-compatible Umami version (v2.19.0).

Run the containers:

```bash
sudo docker compose up -d
```

Then open your browser and go to:

```
http://yourserverip:3001
```

If it loads, you’re good.

Now stop both Umami app containers, the old one and the new one. Leave the PostgreSQL, MySQL, and Redis containers running:

```bash
sudo docker ps
sudo docker stop <umami-container-name>
```

From the old MySQL docker directory, run:

```bash
sudo docker exec -i <mysql-container-name> \
  mysqldump -h 127.0.0.1 -u root -p'YOUR_OLD_ROOT_PASSWORD' \
  --no-create-info --default-character-set=utf8mb4 --quick --single-transaction --skip-add-locks \
  umami > umami_mysql_dump.sql
```

Make the dump Postgres-friendly:

```bash
# 1) MySQL backticks -> Postgres double quotes
sed -i 's/`/"/g' umami_mysql_dump.sql

# 2) MySQL-style escaped quotes -> Postgres-style
sed -i "s/\\\\'/''/g" umami_mysql_dump.sql
```

Copy the dump file into the new Postgres container:

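```bash
# docker cp works from any directory: run it where umami_mysql_dump.sql was
# created (the old MySQL directory), or pass the file's full path
sudo docker cp umami_mysql_dump.sql <postgres-container-name>:/tmp/dump.sql
```

Clear two internal tables (per Umami’s guidance). The fresh v2.19.0 install has already filled them with its own migration history and the default admin user, which would otherwise collide with the rows in your dump: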

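```bash
sudo docker exec -it <postgres-container-name> \
  psql -U <postgres-user> -d umami \
  -c 'TRUNCATE TABLE "_prisma_migrations", "user";'
```

Import the dump, temporarily relaxing foreign-key checks during the load. Setting session_replication_role to replica disables trigger-based checks, including foreign keys, for that session. This requires a superuser, which the POSTGRES_USER created by the official postgres image is: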

```bash
sudo docker exec -i <postgres-container-name> bash -lc '
psql -U <postgres-user> -d umami -v ON_ERROR_STOP=1 <<SQL
SET session_replication_role = '\''replica'\'';
\i /tmp/dump.sql
SQL
'
```

Start Umami again:

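```bash
sudo docker start <new-umami-container-name>
```

Use the name of the new Umami app container, not its database. For example, that's umami-postgresql-umami-1 if you used the directory name above. Then open http://yourserverip:3001 and log in with your existing Umami credentials from the MySQL instance. If websites don’t appear, make sure you’re logged in as the user who owns them.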
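If you'd like to try the two sed substitutions on a few sample lines before running them on a large dump, here they are sketched in Python. The function names are hypothetical. It applies the same two replacements in the same order: backticks become double quotes, then \' becomes ''. It shares the sed version's blind spots. A value ending in an escaped backslash (\\ right before the closing quote) gets mangled, and any literal backtick inside your data is converted too.

```python
from pathlib import Path


def mysql_to_postgres_quoting(sql: str) -> str:
    """Apply the same two substitutions as the sed commands, in the same order."""
    sql = sql.replace("`", '"')     # identifiers: `website` -> "website"
    sql = sql.replace("\\'", "''")  # string escapes: \' -> ''
    return sql


def convert_file(path: str) -> None:
    """Rewrite a dump file in place (reads the whole file into memory)."""
    p = Path(path)
    p.write_text(mysql_to_postgres_quoting(p.read_text(encoding="utf-8")), encoding="utf-8")


print(mysql_to_postgres_quoting("INSERT INTO `website` VALUES ('it\\'s');"))
```

The print line shows INSERT INTO "website" VALUES ('it''s');, which PostgreSQL accepts.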

Now, edit your docker-compose.yml to use the latest image and restore the local binding:

```yaml
image: docker.umami.is/umami-software/umami:postgresql-latest
ports:
  - "127.0.0.1:3000:3000"
```

Back in your old Umami directory:

```bash
sudo docker compose down -v --rmi local --remove-orphans
sudo rm -rf ./umami-db-data ./redis-data
```

Those folder names match the original MySQL-era guide (umami-db-data). If your old stack used a different directory (say, ./umami-mysql-data), swap the paths accordingly so you don’t delete the wrong data.

Then, back in your new Umami directory:

```bash
sudo docker compose up -d
```

Your Umami instance is now fully running on PostgreSQL – finally, the way it should’ve been documented in the first place.

Conclusion and Final Thoughts

With your Umami instance now fully deployed and secured behind a reverse proxy, you have complete control over your analytics.

From here, you can now start adding websites, creating new teams, setting up custom events, and exploring your analytics dashboard – all from your own infrastructure, fully in your control.

You’ve built a powerful, privacy-friendly analytics platform – no third-party trackers, no compromises.

And just as important – test your disaster recovery plan regularly to make sure everything still works as expected. It’s better to catch issues during a test than during a real outage.

If you run into any issues or need further help, feel free to revisit this guide or reach out for assistance.

If you found value in this guide or have any questions or feedback, please don't hesitate to share your thoughts in the discussion section.
